Server Rack Strategies for Energy Efficient Data Centers

Historically, many data centers didn’t put much thought into server rack deployment beyond basic functionality, airflow, and the upfront cost of the rack itself. Today, the widespread adoption of high-density applications is causing major hot spot concerns and capacity issues. These factors, along with high energy costs, require a sound understanding of how closely your server rack deployment is tied to your overall server room and data center efficiency strategy.

IDC (International Data Corporation) estimates that for every $1.00 spent on new data center hardware, an additional $0.50 is spent on power and cooling. Keeping operating costs down is a key concern of all CTOs and data center managers. Cooling is the largest energy overhead in running a data center, so a sound server rack strategy is critical to controlling overall energy consumption and operating costs. Over the coming years, most medium to large organizations will adopt virtualization and higher-density servers. As energy costs continue to rise and data centers grow and expand, companies will look to their facilities and data center managers for sound strategies to address those rising costs.

In a survey conducted by the Uptime Institute, 39% of enterprise data center managers expected their data centers to run out of cooling capacity in the next 12-24 months, and 21% expected to run out of cooling capacity within 12-60 months. The power required to cool IT equipment can rival, and in inefficient facilities exceed, the power required to run that equipment, and as a result overall power in the data center is fast reaching capacity. In the same survey, 42% of these managers expected to run out of power capacity within 12-24 months, and another 23% expected to run out within 24-60 months. Greater attention to energy efficiency and consumption is critical.

The server enclosure is a staple of the data center. Despite the many different manufacturers, the makeup of a server enclosure remains consistent: folded and welded steel, configured to secure servers, switches, and connectivity, the lifeblood of any on-demand organization. The enclosure comes in a variety of dimensions (height × width × depth) and is often customizable to a user’s individual needs, with provisions for cable management and PDU (power distribution unit) installation. For common 42U server rack dimensions, see: https://www.42u.com/42U-cabinets.htm

At the simplest level, the server enclosure is a well-engineered box. Perhaps no accessory in the data center is more important, given the equipment it holds and the data it safeguards. Though it consumes no electricity and contains no moving parts, the enclosure and its orientation have a significant impact on a data center’s ability to become an energy-efficient enterprise.

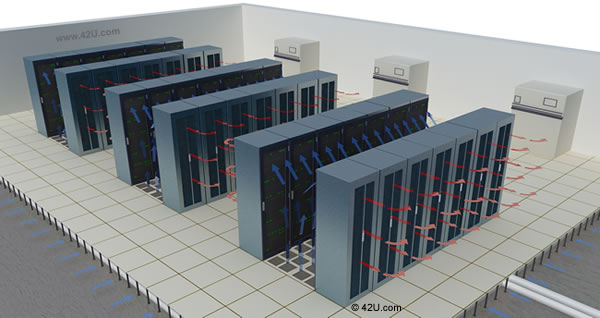

Hot Aisle / Cold Aisle Server Rack Layout

Conceived by Robert Sullivan of the Uptime Institute, hot aisle/cold aisle is an accepted best practice for cabinet layout within a data center. The design uses air conditioners, fans, and raised floors as its cooling infrastructure and focuses on separating the cold inlet air from the hot exhaust air.

In this scheme, the cabinets are joined into a series of rows resting on a raised floor. Because most IT equipment draws air in at the front and exhausts it at the back, the rows are arranged front-to-front and back-to-back: the fronts of facing rows form a cold aisle, and the backs form a hot aisle. Air conditioners positioned around the perimeter of the room push cold air under the raised floor and up into the cold aisle, where the servers ingest it. As the air moves through the servers, it is heated and exhausted into the hot aisle. The exhaust air is then routed back to the air handlers.
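To put rough numbers on the air moving through the servers, the standard sensible-heat relationship for air, BTU/hr ≈ 1.08 × CFM × ΔT (°F), can be rearranged to estimate the airflow a rack needs. The sketch below is a planning aid only, assuming standard air at sea level and a uniform inlet-to-exhaust temperature rise; the function name `required_cfm` and the 10 kW example rack are hypothetical.

```python
# Rough airflow estimate for a rack, assuming standard air at sea level.
# Sensible heat for air: BTU/hr ~= 1.08 * CFM * delta_T (degrees F).

BTU_PER_HR_PER_WATT = 3.41214   # 1 W of electrical load ~= 3.41214 BTU/hr of heat
SENSIBLE_HEAT_FACTOR = 1.08     # BTU/hr per (CFM * degree F) for standard air


def required_cfm(heat_load_watts: float, delta_t_f: float) -> float:
    """Cubic feet per minute needed to carry away heat_load_watts
    with an inlet-to-exhaust temperature rise of delta_t_f (degrees F)."""
    if delta_t_f <= 0:
        raise ValueError("temperature rise must be positive")
    btu_per_hr = heat_load_watts * BTU_PER_HR_PER_WATT
    return btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    # Hypothetical 10 kW rack with a 20 degree F rise across the servers.
    print(f"{required_cfm(10_000, 20):.0f} CFM")  # prints "1580 CFM"
```

Because airflow scales inversely with the temperature rise, doubling ΔT halves the required airflow, which is one reason returning warmer exhaust air to the air handlers pays off.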

Early versions of server enclosures, often with “smoked” or glass front doors, became obsolete with the adoption of hot aisle/cold aisle; perforated doors are necessary for the approach to work. For this reason, perforated doors remain the standard for most off-the-shelf server enclosures, though there’s often debate about the amount of perforated area needed for effective cooling.

While doors are important, the rest of the enclosure also plays a key role in maintaining airflow. Rack accessories must not impede air ingress or egress. Blanking panels and side “air dams” (baffle plates) are both important because they prevent exhaust air from returning to the equipment intake: blanking panels install in unused rackmount space, while air dams install vertically outside the front EIA rails. These extra pieces must coexist with the cable management scheme and any supplemental rack accessories the user deems necessary.
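Because blanking panels belong in every unused rackmount space, one simple audit is to list the empty U positions in each cabinet. The sketch below assumes a hypothetical inventory format of (bottom U, height in U) pairs, with U1 at the bottom of the rack; the function name `open_u_ranges` is illustrative and not part of any rack-management tool.

```python
# Find contiguous runs of empty rack units (U) that need blanking panels.
# Assumes U positions are numbered 1..rack_units from the bottom up and each
# device is described as (bottom_u, height_u).

from typing import List, Tuple


def open_u_ranges(rack_units: int,
                  devices: List[Tuple[int, int]]) -> List[Tuple[int, int]]:
    occupied = [False] * (rack_units + 1)  # index 0 is unused
    for bottom, height in devices:
        for u in range(bottom, bottom + height):
            if not 1 <= u <= rack_units:
                raise ValueError(f"device at U{bottom} does not fit in the rack")
            if occupied[u]:
                raise ValueError(f"U{u} is assigned to more than one device")
            occupied[u] = True

    ranges = []
    start = None
    for u in range(1, rack_units + 1):
        if not occupied[u] and start is None:
            start = u                       # an empty run begins
        elif occupied[u] and start is not None:
            ranges.append((start, u - 1))   # the run ends just below this device
            start = None
    if start is not None:
        ranges.append((start, rack_units))  # the run reaches the top of the rack
    return ranges


if __name__ == "__main__":
    # Hypothetical 42U cabinet: 2U server, 1U switch, 4U server, 3U storage.
    rack = [(1, 2), (3, 1), (10, 4), (40, 3)]
    gaps = open_u_ranges(42, rack)
    print(gaps)  # [(4, 9), (14, 39)]
    print(sum(end - start + 1 for start, end in gaps), "U to blank")  # 32 U to blank
```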

Server Rack with Plexiglas Door vs. Perforated Door

The planning doesn’t stop at the accessory level. Hot aisle/cold aisle forces data center staff to be especially detailed with spacing, sizing each aisle to ensure optimal cooling and heat dissipation. To maintain that spacing, end users must establish a consistent cabinet footprint, paying particular attention to enclosure depth. Legacy server enclosures were often shallow, ranging from 32″ to 36″ deep. As equipment and requirements grew, so did the server enclosure. A 42″ depth has become common in today’s data center, and many manufacturers also offer 48″ deep versions. Beyond accommodating deeper servers, this extra depth leaves room for the previously mentioned cable management products, rack accessories, and rack-based PDUs.
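The effect of enclosure depth on spacing shows up in simple arithmetic. In a repeating hot aisle/cold aisle layout, one module is a cold aisle, a row of cabinets, a hot aisle, and a second row, so its pitch is two cabinet depths plus both aisle widths. The 48″ cold aisle, 36″ hot aisle, and 40 ft room below are hypothetical placeholders, not recommendations; the sketch also ignores end-of-room clearances.

```python
# Pitch of one repeating hot aisle/cold aisle module:
# cold aisle + row + hot aisle + row (all dimensions in inches).


def module_pitch_in(cabinet_depth_in: float,
                    cold_aisle_in: float,
                    hot_aisle_in: float) -> float:
    return 2 * cabinet_depth_in + cold_aisle_in + hot_aisle_in


def modules_that_fit(room_length_in: float, pitch_in: float) -> int:
    # Whole modules only; leftover length is ignored in this sketch.
    return int(room_length_in // pitch_in)


if __name__ == "__main__":
    COLD_AISLE_IN = 48        # hypothetical cold aisle width
    HOT_AISLE_IN = 36         # hypothetical hot aisle width
    ROOM_LENGTH_IN = 40 * 12  # hypothetical 40 ft room

    for depth in (36, 42, 48):
        pitch = module_pitch_in(depth, COLD_AISLE_IN, HOT_AISLE_IN)
        print(f'{depth}" cabinets: {pitch:.0f}" per module, '
              f"{modules_that_fit(ROOM_LENGTH_IN, pitch)} modules in the room")
    # 36" cabinets: 156" per module, 3 modules in the room
    # 42" cabinets: 168" per module, 2 modules in the room
    # 48" cabinets: 180" per module, 2 modules in the room
```

Under these assumptions, moving from 36″ to 42″ cabinets costs a full module in the room, which is why the cabinet footprint should be settled before the aisles are laid out.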

Though hot aisle/cold aisle is deployed in data centers around the world, the design is not foolproof. Medium- to high-density installations have proven difficult for this layout because it often lacks precise air delivery. Even with provisions at the enclosure such as blanking panels and air dams, bypass air (cold air that returns to the air handlers without passing through any equipment) is common, as is hot air recirculation. As a result, more cold air is thrown at the servers to offset the mixing of the air paths, which requires excess energy at the fans and chillers.
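A simple mixing calculation shows why recirculation pushes operators to overcool. If a fraction r of the air entering a server is recirculated exhaust rather than supply air, the server inlet temperature is roughly the flow-weighted average T_inlet ≈ (1 - r) × T_supply + r × T_exhaust. This sketch assumes ideal mixing of two air streams with equal density and specific heat and holds the exhaust temperature fixed; the temperatures and the function name `mixed_inlet_temp_f` are hypothetical.

```python
# Approximate server inlet temperature when some hot exhaust recirculates
# into the intake. Assumes ideal mixing of two air streams with equal
# density and specific heat, and a fixed exhaust temperature (a simplification).


def mixed_inlet_temp_f(supply_f: float, exhaust_f: float,
                       recirc_fraction: float) -> float:
    if not 0.0 <= recirc_fraction < 1.0:
        raise ValueError("recirculation fraction must be in [0, 1)")
    return (1 - recirc_fraction) * supply_f + recirc_fraction * exhaust_f


if __name__ == "__main__":
    # Hypothetical values: 55 F supply air, 95 F exhaust air.
    for r in (0.0, 0.10, 0.25):
        t = mixed_inlet_temp_f(55, 95, r)
        print(f"{r:.0%} recirculation -> {t:.1f} F inlet")
    # 0% recirculation -> 55.0 F inlet
    # 10% recirculation -> 59.0 F inlet
    # 25% recirculation -> 65.0 F inlet
```

To bring the inlet back down, the supply air must be made colder or delivered in greater volume, which is the excess fan and chiller energy the layout incurs.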

Despite this limitation of hot aisle/cold aisle, its premise of separation is widely accepted. Some cabinet manufacturers are taking this premise further, making the goal of complete air separation (or containment, if you will) a reality for the data center space.

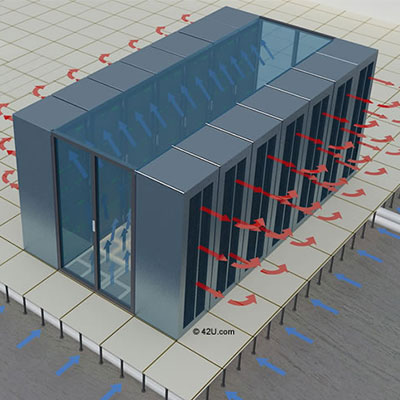

Cold Aisle Containment (CAC)

Cold aisle containment augments the hot aisle/cold aisle arrangement by enclosing the cold aisle. The aisle then becomes a room unto itself, sealed with barriers made of metal, plastic, or Plexiglas. These barriers prevent hot exhaust air from re-circulating, while ensuring the cold air stays where it’s needed at the server intake. With mixing out of the equation, users can afford to set thermostats higher and return very warm exhaust air to the air handlers.

Because hot aisle/cold aisle is so prevalent in existing facilities, cold aisle containment is designed to leverage that arrangement. The cabinets, already loaded with equipment and likely secured to the floor, should not need to be moved or altered. Most of the work centers on the components that form the containment, which must be sized accordingly and attached firmly to the ends of the rows and the tops of the enclosures.

Hot Aisle Containment (HAC)

Hot aisle containment takes the opposite approach. The same barriers now enclose the hot aisle in order to return the warmest possible air to the air conditioners, which also change in form and location: more compact air handlers are embedded within the row of enclosures itself. From this location, the air conditioner captures exhaust air, cools it, and returns it to the cold aisle, where the process repeats. The efficiency in this case comes from proximity: neither the exhaust air nor the cold air has far to travel.

The complexity of deployment depends on the facility. The server enclosure itself shouldn’t change in form or function. The HAC design, however, is predicated on the use of a row-based air conditioner. A new facility can plan this configuration from the outset. An existing facility with hot aisle/cold aisle in place would have to build ducting into a false ceiling or rework entire rows to incorporate the air conditioners, work that may involve running new chilled water pipes to the row location.

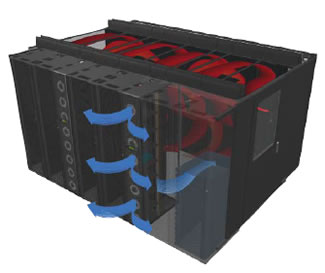

Close-Coupled Cooling

With a close-coupled cabinet orientation, containment reaches its full evolution: both the hot aisle and the cold aisle exist within the same cabinet footprint. The equipment cabinet is joined to a row-based air conditioner that uses chilled water and, like the hot aisle containment design, achieves efficiency through proximity. The difference is that close-coupled cooling all but removes the room from the equation. The server enclosure and air conditioner work exclusively with one another, and no air, hot or cold, is introduced into the space. This combination of proximity and isolation allows for extremely dense installations of approximately 35 kW per rack.
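To put the 35 kW figure in cooling terms, a worked conversion using 1 kW ≈ 3,412.14 BTU/hr and the standard definition of one ton of refrigeration as 12,000 BTU/hr, assuming essentially all electrical power drawn by the rack is released as heat (the usual planning assumption):

```latex
\begin{aligned}
Q &= 35\ \text{kW} \times 3412.14\ \tfrac{\text{BTU/hr}}{\text{kW}} \approx 119{,}425\ \text{BTU/hr} \\
\text{Cooling} &= \frac{119{,}425\ \text{BTU/hr}}{12{,}000\ \text{BTU/hr per ton}} \approx 9.95\ \text{tons}
\end{aligned}
```

In other words, a single rack at this density needs roughly ten tons of dedicated cooling.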

Like hot aisle containment, close-coupled cooling can be planned in advance for greenfield data centers, ensuring that chilled water piping and the necessary infrastructure are in place. Existing data centers with spare mechanical capacity could use the product for a thermally neutral expansion, for instance deploying new high-density blades in an isolated cluster. This new equipment would not strain the existing cooling plant.

For an existing space without the infrastructure or room to expand, there is little recourse for adapting already-populated enclosures to close-coupled cooling.

For those with the infrastructure and space, the efficiency gains of close-coupled cooling are compelling, most notably at the mechanical chiller plant and in the potential for free cooling with water-side economizers.

Conclusion

The server enclosure, once an afterthought in data center planning, has become a pertinent talking point, for no cooling strategy can exist without it. Many data center authorities stress that new cooling approaches are essential to achieving energy efficiency. According to The Green Grid, the process begins with airflow management: an understanding of how air moves around, into, and through the server enclosure.

How much can your organization save by having a more energy efficient data center?


As much as 50% of a data center’s energy bill comes from infrastructure (power and cooling equipment), so reducing your PUE (power usage effectiveness) can result in significant energy and cost savings.
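PUE is total facility energy divided by IT equipment energy, so the infrastructure share of the bill is 1 - 1/PUE; a PUE of 2.0 corresponds to the 50% figure above. The sketch below estimates annual savings from a PUE improvement, assuming a constant IT load and a flat electricity rate; the 200 kW load, $0.10/kWh price, and function names are hypothetical.

```python
# Annual energy and cost at a given PUE (power usage effectiveness).
# PUE = total facility energy / IT equipment energy.
# Assumes a constant IT load and a flat electricity rate.

HOURS_PER_YEAR = 8760


def infrastructure_share(pue: float) -> float:
    """Fraction of the total energy bill consumed by non-IT infrastructure."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1 - 1 / pue


def annual_facility_kwh(it_load_kw: float, pue: float) -> float:
    return it_load_kw * pue * HOURS_PER_YEAR


def annual_savings(it_load_kw: float, pue_before: float, pue_after: float,
                   price_per_kwh: float) -> float:
    saved_kwh = (annual_facility_kwh(it_load_kw, pue_before)
                 - annual_facility_kwh(it_load_kw, pue_after))
    return saved_kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical facility: 200 kW IT load, $0.10/kWh, PUE improved 2.0 -> 1.6.
    print(f"Infrastructure share at PUE 2.0: {infrastructure_share(2.0):.0%}")  # 50%
    print(f"Annual savings: ${annual_savings(200, 2.0, 1.6, 0.10):,.0f}")       # $70,080
```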

Below are formulas to help calculate heat load and select a rack air conditioner:

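- Add up the wattage of each device and convert the total to BTU/hr (a device’s manual or nameplate usually lists its wattage). You can also estimate wattage with the formula Watts = Volts × Amps; strictly, this gives volt-amperes (VA), so for AC equipment multiply by the power factor to get true watts. Remember that 1,000 Watts = 1 kW.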

- If a rack air conditioner will be used, consider that every 1 kW consumed generates 3,412.14 BTU/hr of heat. Air conditioners are rated in BTU/hr, so choose a unit whose rating meets or exceeds the total heat load.
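The two steps above can be combined into a small heat-load tally. This is a sketch under stated assumptions: the device list and the 18,000 BTU/hr unit rating are hypothetical, nameplate figures are typically maximums rather than typical draw, and the optional power factor (default 1.0) reflects that volts × amps gives volt-amperes rather than true watts for AC equipment.

```python
# Rack heat-load tally: sum device wattage, convert to BTU/hr, and check it
# against an air conditioner's rating.

from typing import List

BTU_PER_HR_PER_KW = 3412.14  # 1 kW of electrical load ~= 3,412.14 BTU/hr of heat


def watts_from_volts_amps(volts: float, amps: float,
                          power_factor: float = 1.0) -> float:
    # volts * amps gives volt-amperes; multiply by power factor for watts.
    return volts * amps * power_factor


def heat_load_btu_per_hr(device_watts: List[float]) -> float:
    total_kw = sum(device_watts) / 1000  # 1,000 W = 1 kW
    return total_kw * BTU_PER_HR_PER_KW


if __name__ == "__main__":
    # Hypothetical rack contents.
    devices = [350.0] * 10                           # ten servers at 350 W each
    devices.append(watts_from_volts_amps(120, 1.5))  # switch: 120 V x 1.5 A = 180 W
    devices.append(800.0)                            # storage shelf

    load = heat_load_btu_per_hr(devices)
    ac_rating = 18_000                               # hypothetical unit rating, BTU/hr
    print(f"Heat load: {load:,.0f} BTU/hr")          # Heat load: 15,286 BTU/hr
    print("AC rating sufficient" if ac_rating >= load else "AC rating too low")
```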


References

Miller, R. (2008, August 11). A Look Inside the Vegas SuperNAP. Retrieved December 19, 2008, from Data Center Knowledge: http://www.datacenterknowledge.com/archives/2008/08/11/a-look-inside-the-vegas-supernap/

Niemann, J. (2008). Hot Aisle vs. Cold Aisle Containment. Retrieved December 23, 2008, from APC Corporate Web Site: http://www.apc.com/whitepaper/?wp=135

Sullivan, R. (2002). Alternating Cold and Hot Aisles Provides More Reliable Cooling for Server Farms. Retrieved December 15, 2008, from Open Xtra: http://www.openxtra.co.uk/articles/AlternColdnew.pdf

The Green Grid. (2008, October 21). Seven Strategies To Improve Data Center Cooling Efficiency. Retrieved December 18, 2008, from The Green Grid: http://www.thegreengrid.org/gg_content/White_Paper_11_-_Seven_Strategies_to_Cooling_21_October_2008.pdf

Tschudi, W. (2008, June 26). SVLG Data Center Summit-LBNL Air Management Project. Berkeley, CA, United States of America.