Data Center Energy Efficiency Best Practices

Because energy efficiency was historically a low priority and energy expenses flow through the facilities team, some data center managers don’t yet grasp the significance of data center energy costs. The following information can be used to estimate the range of savings that various energy efficiency programs have the potential to yield.

Self-Funding Efficiency Program Model

A systematic, phased approach minimizes initial investment requirements. This approach first establishes a baseline consumption value. Efficiency gains are then measured against that baseline, so each result can be quantified. A variety of low-cost/no-cost measures undertaken in the initial phases can yield significant savings. The resulting savings are then used to fund more sophisticated efficiency programs.
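The baseline comparison described above comes down to simple arithmetic, sketched here in Python. The monthly kWh figures and the $0.12/kWh rate are illustrative assumptions, and `savings_vs_baseline` is a hypothetical helper name rather than part of any 42U tool.

```python
def savings_vs_baseline(baseline_kwh, measured_kwh, rate_per_kwh):
    """Return (kWh saved, dollars saved, fraction saved) relative to the baseline."""
    if baseline_kwh <= 0:
        raise ValueError("baseline_kwh must be positive")
    saved_kwh = baseline_kwh - measured_kwh
    return saved_kwh, saved_kwh * rate_per_kwh, saved_kwh / baseline_kwh


# Illustrative numbers only: a 100,000 kWh/month baseline, then 92,000 kWh/month
# after a first round of low-cost measures, billed at an assumed $0.12/kWh.
saved_kwh, saved_dollars, saved_fraction = savings_vs_baseline(100_000, 92_000, 0.12)
print(f"{saved_kwh:,} kWh saved, ${saved_dollars:,.2f}, {saved_fraction:.1%} of baseline")
```

Repeating the same calculation after each phase shows how much funding the earlier measures have freed up for the next program.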

42U efficiency solutions, backed by a team of efficiency experts, establish the baseline for measuring ROI on efficiency projects. By measuring ongoing efficiency gains, efficiency programs can be cost-justified and deliver documented savings.

Efficiency Messaging

The efficiency programs you institute can be used to communicate a positive message both internally and externally. Because the savings can be substantial, the energy efficiency programs you undertake can have a significant bottom-line impact on your business. Internally, the data center team can deliver excellent bottom-line results to the CIO, who can in turn deliver those results to the executive team and the board.

Externally, efficiency initiatives can yield positive press and satisfy larger corporate objectives. Green initiatives attract considerable attention today, and data center efficiency programs can be positioned as green initiatives.

General Considerations

The dynamic nature of a data center makes maintaining an efficient configuration a challenging effort. The sheer volume of projects in play in the typical data center can make the added effort of efficiency take a back seat to the core objective of availability. Established procedures and policies ensure that every project accounts for its efficiency impacts and that data center efficiency is maintained.

Another major consideration is longitudinal planning. Investing the time and effort to improve visibility of changes to computing needs will yield benefits in the form of continued energy efficiency. This approach allows rightsizing of the infrastructure, which has the potential to reduce the energy expense by as much as 50%. The use of modular component technologies enables scaling the infrastructure in concert with application growth.

Until recently, including energy impacts in planning for new applications was not cost effective. The cost of power, coupled with capacity limitations, has elevated the importance of energy efficiency planning. This additional effort not only reduces energy costs but also yields long-term benefits in both reduced capital and operational expenses.

The following sections describe specific areas to examine for efficiency gains. Following these general descriptions is a section that describes the potential range of energy savings of ten efficiency strategies.

System Design

How the overall data center is designed has one of the most significant impacts on data center efficiency. Two data centers with the exact same set of equipment can have very different energy bills based on how they are configured. The assumption that focusing on individual component efficiency results in an efficient system is flawed. The interaction of the components must be considered to achieve peak efficiency.

System Redesign

The reality is that most data centers are living organisms that have grown over time. The original design considerations have become obsolete as the core business the data center supports has moved to meet market demands. In this situation design and planning have to consider existing conditions. These include the installed base of technologies, their ages, and the ROI on upgrading some or all subsystem components within the larger system.

Newer technologies are typically more power efficient. This efficiency gain must be weighed against the service life of the existing technology as well as potential training requirements for new technology operation and maintenance. For example, best-in-class UPS systems have 70% less energy loss than legacy UPS systems at typical loads.

Airflow Management

Airflow dynamics can be improved through a few simple efforts. These airflow management improvements decrease server inlet temperatures while increasing the CRAC return air temperature, which improves efficiency and reduces energy consumption.

Inside racks the open areas should be covered so that air entering the rack passes through the IT equipment rather than around it. Blanking panels are an inexpensive solution that covers the vacant sections inside of the rack.

Unstructured cabling can constrain exhaust airflow from equipment in racks. The best practice is to use a structured cabling system where excess cable is eliminated. In addition to removing unused cabling, cutting cables and power cords to the correct length will provide more room for air to flow away from the back of the rack.

Closing off gaps in other parts of air plenums will also improve cooling efficiency. This can be accomplished using devices such as floor grommets to seal areas where cables enter and exit plenums. A significant improvement in air pressure can be realized by installing this type of simple equipment.

Sub-floor obstructions are another potential area where airflow can be improved. Many data centers use the sub-floor plenum for more than just airflow. A physical examination of the entire plenum can reveal blockage issues that if rectified will improve efficiency. Taking fixed obstructions into account will also yield a more efficient floor tile arrangement, discussed below.

Cooling Coordination

Most data centers have multiple air conditioning systems. In some cases these systems have set points that compete or conflict. One system may dehumidify while another humidifies, or some systems may actually be heating air. The various cooling technologies in the data center need to be coordinated. Expert analysis is often required to diagnose and remediate these types of conditions.

Tile Management

This aspect of efficiency should be straightforward, but often it is not. Determining the right number, type and location of vented tiles in a data center requires specific ASHRAE expertise, computational fluid dynamics (CFD) software or some form of real-time instrumentation. New technologies do provide substantial help in this area.

Investing time in this aspect of data center management ensures overheated, or under-cooled, areas of the data center are corrected. Comprehensive tile analysis also allows CRAC location optimization.

Floor Layout

How equipment is arranged on the floor has a major impact on energy efficiency in your data center. The most important aspect of this relates to cooling. A best-practice layout will improve the efficiency of airflow, which has a direct impact on the amount of fan energy needed to direct cooled air to the equipment that needs to be cooled.

The fundamental best practice is the hot/cold aisle arrangement. This technique improves efficiency in several ways. It allows you to place cooling equipment where it will establish the most efficient airflow. A proper design delivers cool air to the IT equipment as directly as possible and returns heated air to the cooling equipment with a minimum of mixing of hot and cold airflows.

Air Containment

The next level of sophistication in data center efficiency is to contain the airflow in the cold and hot aisles to avoid mixing of air across or around aisles. There are two primary types of containment models: hot-aisle and cold-aisle containment. While both models ultimately contain both types of aisles, as the names imply, each technique optimizes for the physics of airflow for a specific type of aisle.

In simple terms, hot-aisle containment is designed to evacuate heated air and direct it back to the air conditioning equipment as efficiently as possible. Cold-aisle containment focuses on directing the outlet air from the air conditioning equipment as efficiently as possible to the front of the racks in the cold aisle. Studies indicate that cold-aisle containment is considerably more efficient than hot-aisle containment.

Close Coupled Cooling

Historically, data center managers could use rack load design points and assume this design point applied to every rack. This allowed them to calculate cooling and other infrastructure requirements using averages and relatively simple math. The subsequent cooling system design simply flooded the entire data center with cooled air.

Today’s reality is very different. Virtualization, blade servers and other technologies have created a situation where one rack can have a 3kW load and the next rack a 30kW load. In a large data center these high density racks can be distributed in an unpredictable pattern across the floor.
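A short Python sketch shows why sizing to an average breaks down once rack loads diverge. The rack names and loads are illustrative assumptions built around the 3kW and 30kW figures above, and `racks_over_design_point` is a hypothetical helper.

```python
def racks_over_design_point(rack_loads_kw, design_point_kw):
    """Return the names of racks whose load exceeds the per-rack design point."""
    return [name for name, load in rack_loads_kw.items() if load > design_point_kw]


# Illustrative row: three low-density racks and one high-density rack.
rack_loads_kw = {"A1": 3.0, "A2": 30.0, "A3": 4.0, "A4": 3.0}

# The historical approach: treat the average as the design point for every rack.
average_kw = sum(rack_loads_kw.values()) / len(rack_loads_kw)
hot_racks = racks_over_design_point(rack_loads_kw, design_point_kw=average_kw)

print(f"average load per rack: {average_kw} kW")
print(f"racks above the average design point: {hot_racks}")
```

In this example, cooling provisioned for the 10 kW average would over-serve three racks while leaving rack A2 with a third of what it needs.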

Close-coupled cooling solves this challenge by allowing high density racks to have supplemental cooling that is dedicated to the rack or series of racks. This type of solution improves efficiency because it employs liquid cooling technology, shortens the air paths and eliminates mixing of cold and hot air streams.

An area of concern related to liquid cooling is delivery of liquid to the systems. Sound engineering practices ensure this concern is addressed. Insulation, accessibility, isolation and leak detection systems are some of the key design factors that ensure a safe and reliable liquid cooling solution. Also, data centers already proved that liquid cooling can be managed when they deployed water-cooled mainframes.

Economizer Operation

In many geographic locations economizer technologies can realize substantial energy savings. Many air conditioner technologies offer economizer options, but this mode is often disabled or the equipment is not correctly configured to take advantage of this mode of operation.

Economizers leverage the outside ambient air conditions to reduce or eliminate mechanical cooling. There are two types of economizer technologies: air-side and water-side. Air-side economizer operations circulate outside air through the cooling system when the ambient air is at or below the CRAC outlet temperature setting. The air must still be properly conditioned and filtered to ensure proper humidity and particulate levels are maintained. In areas near bodies of salt water, salinity of the outside air must be reduced to avoid corrosion issues.

Water-side economizers use ambient external air to chill water or coolant when the air temperature is low enough to chill the water to the target temperature, typically 45 degrees Fahrenheit. This type of technology includes an appropriate heat exchanger as well as sensor technologies that redirect the fluid flow through the heat exchanger and turn off the mechanical chiller.
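The two temperature conditions described above can be sketched as simple checks in Python. This is a simplified illustration, not a control algorithm: the function names are hypothetical, the 10-degree heat exchanger approach (`approach_f`) is an assumed value that is not in the source, and humidity, filtration and salinity are ignored.

```python
def air_side_available(outside_air_f, crac_outlet_setpoint_f):
    """Air-side: outside air is at or below the CRAC outlet temperature setting."""
    return outside_air_f <= crac_outlet_setpoint_f


def water_side_available(outside_air_f, target_water_f=45.0, approach_f=10.0):
    """Water-side: outside air is cold enough to chill water to the target.

    approach_f is an ASSUMED temperature difference across the heat exchanger;
    the real value depends on the installed equipment.
    """
    return outside_air_f + approach_f <= target_water_f


# Illustrative outside temperatures (degrees Fahrenheit) and an assumed 65 F setpoint.
for outside_f in (30.0, 50.0, 80.0):
    print(
        f"{outside_f:.0f} F outside:",
        f"air-side={air_side_available(outside_f, 65.0)},",
        f"water-side={water_side_available(outside_f)}",
    )
```

Running the same checks against local hourly weather data would give a first rough count of the hours per year in which each mode could operate.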

Server Replacement Policy

Some amount of server consolidation can be achieved using an ongoing replacement policy. This can be particularly effective in large data centers where there is a relatively regular flow of equipment that is replaced due to failures or support expiration. The policy identifies which energy efficient systems should be used as replacements. For example, a retired four-way server can be replaced with a two-way dual-core server.

Consolidation

A formal consolidation effort will result in additional savings. This effort is designed to identify underutilized, idle or unused servers that can be decommissioned. Assessing all servers in the data center and determining their utilization rates will uncover servers that are doing nothing as well as systems performing single, infrequent or limited tasks. Consolidating these workloads onto fewer servers allows the surplus systems to be eliminated.
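The utilization assessment can be sketched as a simple classification in Python. The server names, utilization figures and both thresholds are illustrative assumptions; a real assessment would choose cutoffs from measured data over a representative period.

```python
def consolidation_candidates(utilization_by_server, idle_below=0.01, underused_below=0.10):
    """Split servers into idle and underutilized groups by average utilization.

    Utilization is a fraction between 0 and 1. Both thresholds are assumed
    values for illustration only.
    """
    idle = [s for s, u in utilization_by_server.items() if u < idle_below]
    underused = [
        s for s, u in utilization_by_server.items()
        if idle_below <= u < underused_below
    ]
    return {"idle": idle, "underutilized": underused}


# Hypothetical inventory with average CPU utilization per server.
inventory = {"web01": 0.45, "batch07": 0.04, "old-db": 0.0, "report02": 0.08}
candidates = consolidation_candidates(inventory)

print("decommission candidates:", candidates["idle"])
print("consolidation candidates:", candidates["underutilized"])
```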

Virtualization

Advances in software have abstracted operating systems to a point where a direct physical relationship is no longer required. Applications can run on a virtual server using software that mimics operating system behaviors for the given application. The virtualization software manages the hardware requirements in a similar fashion, placing an operational layer above the actual physical servers. This allows more flexible management of the hardware devices as well as more efficient utilization rates.

Estimating Best Practice Energy Savings

The following information describes several efficiency best practices to consider. [7] Each best practice indicates the range of achievable energy savings. There are wide ranges of savings due to the degree of variation in specific technologies and data center designs.

This information can be used in conjunction with the Estimated Energy Expense calculation to estimate the potential savings for a specific data center. This data is intended to be used to begin the discussion of energy efficiency programs.

The 42U team can mature this estimated data into a reliable baseline that can be used to document measurable ROI. 42U solutions can reduce energy expenses by 20-50% [6] for low-cost/no-cost improvements and up to 80% for systematic efficiency efforts.

Blanking Panel Installation – 1-2%

This is a low-cost program that can reduce energy expense by 1-2%. This can be done in any data center. Blanking panels are a fundamental airflow control strategy that improves airflow through equipment and avoids inefficient airflow around the equipment needing cooling. This practice decreases server inlet temperatures as well as increases the CRAC return air temperature, both of which improve operational efficiency.

Floor Plenum Management – 1-6%

This is another low-cost program for raised floor data centers that can reduce energy expense by 1-6%. Vented tiles are incorrectly located or sized in many data centers. Due to the complexity of airflow behavior, the correct configurations are not readily obvious. A professional assessment will ensure optimal results.

Open spaces around cable feeds in the floor plenum should also be sealed with grommets. This improves plenum air pressure and further reduces bypass airflow.

Floor Layout Planning – 5-15%

With correct cabling this can be a no-cost effort that can reduce energy expense by 5-15%. This best practice is to arrange racks in alternating hot and cold aisles with rack fronts and backs facing one another, coupled with efficient air conditioner locations.

Without structured cabling, and possibly for other reasons, this may be a difficult or impossible retrofit. It is best applied to expansions and new designs.

Aisle Containment Systems – 5-10%

In data centers with hot/cold aisle arrangements, containment systems can reduce energy expense by 5-10%. This is a lower-cost solution that contains the airflow and routes it directly to the equipment in an efficient manner. Containment can focus on either the hot aisle or the cold aisle, but cold aisle containment has been proven to be more efficient.

Air Conditioner Coordination – 0-10%

An assessment of the settings and interaction of multiple CRACs in a data center can reduce energy expense by 0-10%. The sole expense for this program is a professional assessment. Ensuring that units are not working against one another eliminates gross energy waste.

Power Equipment Efficiency – 4-10%

Refreshing older UPS systems with new best-in-class technology can reduce energy expense by 4-10%. Newer technologies have 70% lower losses than legacy systems at typical load levels. Light-load efficiency, not peak-load efficiency, is the key parameter because light load is the typical operating state for the UPS.

Economizer Systems – 4-15%

In some geographic locations economizers can reduce energy expense by 4-15%. This type of solution is difficult to retrofit and requires a professional assessment to determine how cost-effective this solution is for a given data center.

Air Conditioner Architecture – 7-15%

For higher-density environments this approach can reduce energy expense by 7-15%. This approach shortens the air paths, which requires less fan power and eliminates mixing of air. This can be implemented as row-oriented cooling or close-coupled cooling depending on the number of high-density racks involved.

Virtualization – 10-40%

This solution has a very significant impact that can reduce energy expense by 10-40%. While not technically a physical infrastructure solution, it involves consolidation of applications onto fewer servers.

Infrastructure Right-Sizing – 10-30%

This solution can result in energy savings of 10-30%. This deploys a modular approach to power and cooling architecture that allows scaling these aspects of the infrastructure to the specific needs of the data center. The savings come in the form of eliminating over-provisioning.

Planning For Efficiency

Due to the complex interactions of best practices, estimating the net impact of multiple programs is difficult. From a business best practice perspective, 42U advises clients to begin by establishing an energy expense baseline and then implementing each program in an orderly, measurable process. The low-cost/no-cost efforts should be performed first to build momentum for the more complex and sophisticated programs. The savings realized can be used to fund the more advanced solutions.

Energy Program Cost Justification

While a formalized and measurable program should ultimately be defined, early discussions can be facilitated by applying the projected savings above to the Estimated Energy Expense to have a working number for the savings potential. This provides insight into the potential of energy efficiency programs.
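As a rough illustration of that calculation, the Python sketch below applies each best-practice savings range listed earlier to an annual energy expense. The $500,000 expense is an assumed figure for illustration, and `estimate_savings` is a hypothetical helper. The ranges are reported per program and deliberately not summed, because the best practices interact and their net impact is hard to estimate.

```python
# Savings ranges (low %, high %) taken from the best-practice list above.
SAVINGS_RANGES_PCT = {
    "Blanking Panel Installation": (1, 2),
    "Floor Plenum Management": (1, 6),
    "Floor Layout Planning": (5, 15),
    "Aisle Containment Systems": (5, 10),
    "Air Conditioner Coordination": (0, 10),
    "Power Equipment Efficiency": (4, 10),
    "Economizer Systems": (4, 15),
    "Air Conditioner Architecture": (7, 15),
    "Virtualization": (10, 40),
    "Infrastructure Right-Sizing": (10, 30),
}


def estimate_savings(annual_energy_expense, ranges_pct=SAVINGS_RANGES_PCT):
    """Return {program: (low dollars, high dollars)} for each program on its own."""
    return {
        name: (annual_energy_expense * low / 100, annual_energy_expense * high / 100)
        for name, (low, high) in ranges_pct.items()
    }


# Assumed annual energy expense, for illustration only.
estimates = estimate_savings(500_000)
for name, (low, high) in estimates.items():
    print(f"{name}: ${low:,.0f} to ${high:,.0f} per year")
```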

Summary

The importance of energy efficiency in the data center is a relatively recent realization that is only now dawning across the data center landscape. Costs as well as environmental concerns are forcing the data center management community to give more consideration to energy efficiency programs. The information above helps data center managers to quantify the value of various efficiency programs.

42U helps data center managers to identify the efficiency programs that are most appropriate for a specific data center and define a phased plan for implementing those programs. For clients interested in cost-justifying efficiency programs, 42U establishes an energy expense baseline and assists clients in instrumenting data centers to allow linear tracking of energy consumption, enabling computation of a reliable ROI as each efficiency best practice is implemented.

Footnotes

- The $0.40/kWh figure comes from a specific engagement with a client who pays this rate for a data center located in mid-Manhattan.

- Murphy J., “Building a Green Data Center,” Robert Frances Group, 2007 – Page three displays a graph showing average cost/kWh at $0.10 in 2006. Other reports referenced here indicate the annual increase has driven the average to $0.12. For energy costs by state: http://www.eia.gov/electricity/state/. This site indicates the national average is $0.098/kWh, but it includes many low-cost, low-density states that distort the average for more populated areas. The site also shows state-by-state averages, so you can use state-specific data when doing the calculation. ftp://ftp.compaq.com/pub/products/servers/Whitepaper…

- Scaramella, J., “Enabling Technologies for Power and Cooling,” Technical Brief, IDC 2006 – Page three projects power consumption per rack moving from 1kW in 2000 to 6-8kW in 2006 and above 20kW in five years (2011). ftp://external.hp.com/pub…

- Rasmussen, N., “Implementing Energy Efficient Data Centers,” APC, 2006 – Bottom of page three uses the $0.12/kWh data point and estimates the annual electrical cost per kW of IT load to be $1,000 and posits a “10 year life of a typical data center” to extend the “lifetime” cost to $10,000/kW of IT load. Dividing $1,000 by $0.12/kWh yields 8,333 hours, which out of the 8,760 hours in a year translates to slightly more than 95% uptime. http://www.apcmedia.com/salestools…

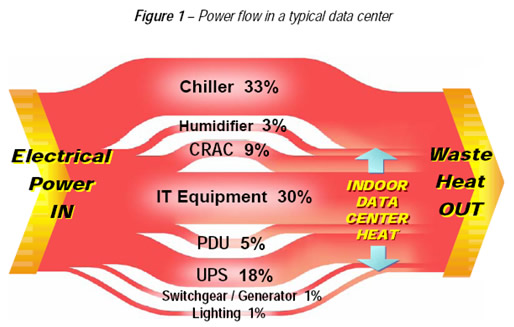

- “EPA Report to Congress on Server and Data Center Energy Efficiency,” EPA August 2007 – The graphic below summarizes some of the findings of the report that have subsequently become the basis for the PUE calculation comprehended by The Green Grid. Using these percentages, the relationship between the IT load and other energy consumers in the data center can be estimated. http://www.energystar.gov/ia/partners/prod_development/downloads…

- “EPA Report to Congress on Server and Data Center Energy Efficiency,” EPA August 2007 – Table ES-1 on page 9 summarizes the estimated efficiency gains that clients can expect to realize for various levels of efficiency programs and technologies. It ranges from 30-80%. http://www.energystar.gov/ia/partners/prod_development/downloads…

- Rasmussen, N., “Implementing Energy Efficient Data Centers,” APC, 2006 – Pages 13-14 provide range estimates for various improvements. It also provides insights into limitations of each approach and considers additional strategies.

- “Guidelines For Energy-Efficient Datacenters”, The Green Grid, February, 2007 http://www.thegreengrid.org/~/media/WhitePapers/…